Quick thoughts on GPT-3

OpenAI, an AI research foundation started by Elon Musk, Sam Altman, Greg Brockman, and a few other leaders in ML, recently released an API and website that allow people to access a new language model called GPT-3. I’ve had the chance to play with it over the past few days and have been truly amazed by its capabilities.

I’d like to start by stating that, especially compared with my extremely intelligent ML friends, I am quite the layman, so this post is aimed at a nontechnical audience, and I apologize in advance for any technical errors.

GPT-3 is essentially a context-based generative AI. What this means is that when the AI is given some sort of context, it then tries to fill in the rest. If you give it the first half of a script, for example, it will continue the script. Give it the first half of an essay, it will generate the rest of the essay.

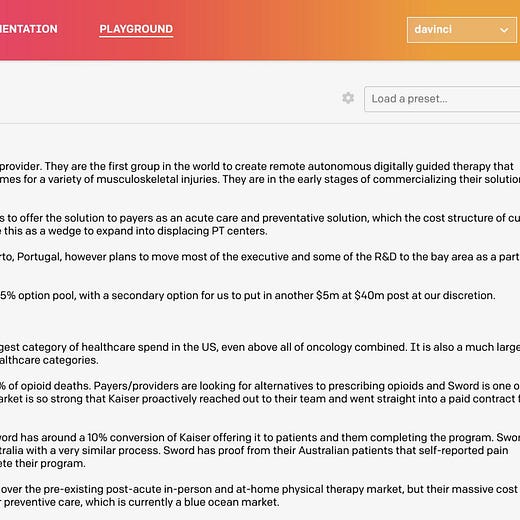

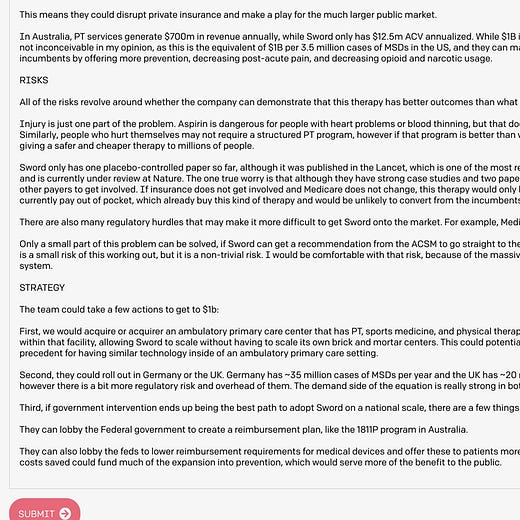

Here are some examples that I tweeted, first where I fed it half of an investment memo I have published on my website:

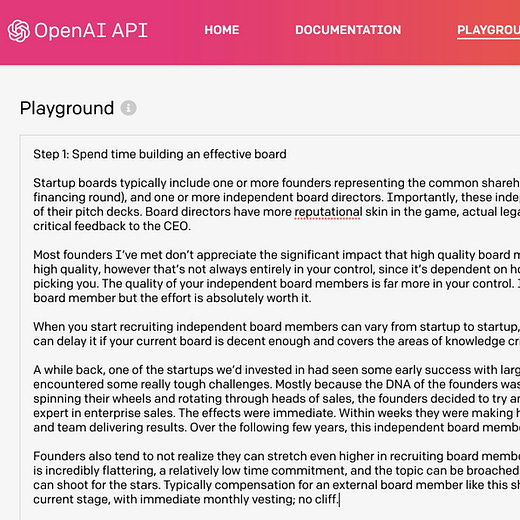

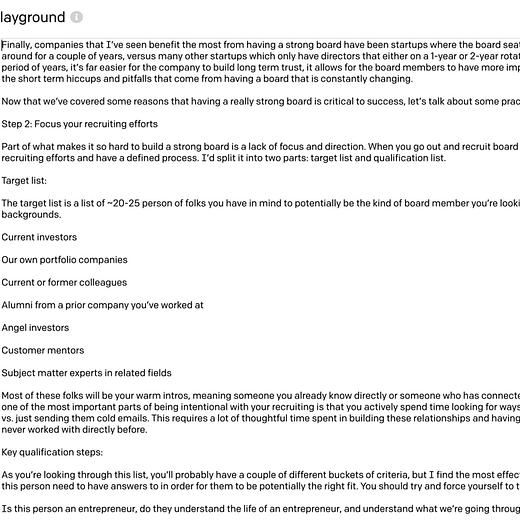

And my second example, where I fed it half of an essay on how to run effective board meetings:

Both times, the AI was able to generate cogent (although not always correct) additional paragraphs, and in both examples it followed the prior formatting, e.g. section headers (ex: risk) and steps.

What’s incredible about the tool is you can feed it almost any context -- a script about a gay couple in Italy, an interview between two tech luminaries, or even a political column about an election -- and it is able to put together decently coherent arguments.

Now, before you get too excited, this isn’t some sort of general AI, and the machine doesn’t really have a way of understanding if what it is outputting is true or not. The simplest way to explain how it works is that it was trained on a massive sample of text from the internet, and learned to predict what words come next in a sentence given prior context. Based on the context you give it, it responds with what it believes is the statistically most likely continuation, based on everything it learned from that text data.

This is a strategy that OpenAI and other researchers have been pursuing for quite some time, starting off with a ‘simple’ problem like trying to predict the next word in a sentence. They have steadily built up to where they are today, where a model like GPT-3 can complete several paragraphs or more. Though this is an incredible result, even GPT-3 may at some point lose direction and wander aimlessly. Despite its massive size (175B parameters), it may still struggle with keeping a long-term destination in mind or holding logical, consistent context over many paragraphs.
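To make the "predict the next word" idea a bit more concrete, here is a toy sketch in Python. To be clear, this is not how GPT-3 is built: GPT-3 is a very large neural network, while this is just a word-pair counter over a three-sentence corpus that I made up. The function names (`train_bigram_model`, `complete`) are my own inventions for illustration. It only shows the general flavor: count what tends to follow what, then keep appending the most likely next word.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for every word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def complete(model, prompt, max_new_words=5):
    """Extend the prompt by repeatedly appending the most frequent next word."""
    words = prompt.lower().split()
    if not words:
        return ""
    for _ in range(max_new_words):
        candidates = model.get(words[-1])
        if not candidates:
            break  # the model has never seen this word, so it has nothing to say
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


# Made-up miniature "internet" to learn from (an assumption for this sketch).
corpus = (
    "the cat sat on the mat . "
    "the cat sat on the sofa . "
    "the cat ate the fish ."
)
model = train_bigram_model(corpus)
print(complete(model, "the cat", max_new_words=3))
```

Ask this toy for more words and it starts looping ("the cat sat on the cat sat on ..."), because it only ever looks at the single previous word. That is a crude version of the wandering described above: the continuation is locally plausible, but there is no long-term plan behind it.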

In my opinion, although there’s debate on this, it means that while the tool is very impressive and GPT-4 will likely show further improvements, there are probably diminishing marginal returns to this approach. OpenAI can keep getting better at running really complicated statistics on all of the text people have ever written, but the AI is still not capable of “reasoning”.

There’s a good essay that explains some of this AI’s limitations in relation to passing the Turing test (http://lacker.io/ai/2020/07/06/giving-gpt-3-a-turing-test.html), i.e. if you talk to an AI, can you tell whether it’s an AI or not? Remarkably, the easiest way to trip it up is to ask it somewhat nonsensical questions like “how many schnoozles fit in a wambgut?” Statistically, most of the time the AI has seen a question like that on the internet, it was answered with a statement structured like “3 Xs fit in a Y”, so it answers “3 schnoozles fit in a wambgut” rather than something more appropriate, such as “those are made-up objects” or “I don’t know”.

While there are some limitations, because GPT-3 is so good at producing coherent follow-on thoughts, I think there are a ton of upsides where you include a human in the loop as an editor for maintaining correctness.

This tool is phenomenal as a writing buddy. If you’re a playwright setting up a scene, GPT-3 is a great way to explore paths that the scene can take: it can keep track of the characters and write dialog that makes sense. If you’re a comic, you can start off a funny story about your childhood, and GPT-3 can help you come up with some more riffs. If you’re an entrepreneur trying to increase the productivity of your chat teletherapy company, GPT-3 can probably come up with 3-4 cogent, paragraph-long answers to your users that a human can quickly review and approve, significantly improving your team’s productivity.

There are probably simple use cases where GPT-3 can work entirely on its own, but I think many of the use cases will involve a human in the loop.
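For the more technical readers, the human-in-the-loop workflow can be sketched in a few lines of Python. Everything here is an assumption for illustration: `generate_candidates` is a hypothetical stand-in that returns canned drafts rather than calling any real API, and `review` stands in for a person clicking "approve" on a draft.

```python
from typing import Callable, List, Optional


def generate_candidates(prompt: str, n: int = 3) -> List[str]:
    """Hypothetical stand-in for a text-generation call; returns n canned drafts."""
    return [f"Draft {i + 1} in reply to: {prompt}" for i in range(n)]


def review(candidates: List[str], approve: Callable[[str], bool]) -> Optional[str]:
    """Return the first draft the human reviewer approves, or None if all are rejected."""
    for draft in candidates:
        if approve(draft):
            return draft
    return None


drafts = generate_candidates("I have trouble sleeping before exams.", n=3)
# In a real product, approve would be a person reading the draft; here it is simulated.
chosen = review(drafts, approve=lambda d: d.startswith("Draft 2"))
print(chosen)
```

The point of the design is that nothing reaches the user unless `approve` says yes, so the model does the drafting and the human stays responsible for correctness.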

My favorite analogy for explaining GPT-3 is that the iPhone put the world’s knowledge into your pocket, but GPT-3 provides 10,000 PhDs who are willing to converse with you on those topics.

30 years ago, Steve Jobs described computers as “bicycles for the mind.” I’d argue that, even in its current form, GPT-3 is “a racecar for the mind.”

Can’t wait to see what technology entrepreneurs build on this platform and look forward to funding some of them.